Hi everyone!

I'm currently trying to write an article about $$$\lambda$$$-optimization in dynamic programming, commonly known as "aliens trick". While writing it, I stumbled upon a fact which, I believe, is a somewhat common knowledge, but is rarely written out and proved explicitly. This fact is that we sometimes can repeatedly use ternary search when we need to optimize a multi-dimensional function.

Thanks to

- mango_lassi for a useful discussion on this and for counter-example on integer ternary search!

- Neodym for useful comments and remarks about the article structure.

$$$1$$$-dimensional search

Def. 1. A function $$$f : X \to \mathbb R$$$, where $$$X \subset \mathbb R$$$, is called unimodal if there is a segment $$$[L, R]$$$ such that $$$f(x)$$$ strictly decreases on $$$(-\infty, L] \cap X$$$, strictly increases on $$$x \in [R,+\infty) \cap X$$$ and is equal to its global infimum on $$$x \in [L, R] \cap X$$$.

Ternary search is a family of similar algorithms that allow to estimate the infimum value of a unimodal function $$$f: X \to \mathbb R$$$ on a continuous segment of arguments by splitting it into $$$3$$$ roughly equal parts and reducing the search to at most $$$2$$$ of these parts.

The algorithm takes a segment $$$[l,r]$$$ as an input and chooses two points $$$x_1, x_2 \in X$$$ such that $$$l \leq x_1 < x_2 \leq r$$$. If $$$f(x_1) < f(x_2)$$$, the segment is reduced to $$$[l, x_2]$$$ and otherwise it's reduced to $$$[x_1, r]$$$.

The algorithm stops when a certain condition is met, typically when $$$r-l \leq \varepsilon$$$ or when a certain number of iterations is met.

Multi-dimensional unimodality

Def. 2. A set $$$X \subset \mathbb R^c$$$ is called convex if for all $$$x_1, x_2 \in X$$$ and all $$$\lambda \in [0, 1]$$$ it contains $$$x_1 + \lambda[x_2 - x_1]$$$.

Let $$$X \subset \mathbb R^c$$$ be a convex set. There are two most common ways to generalize unimodality on higher dimensions.

Def. 3. A function $$$f: X \to \mathbb R$$$ is called convex if for any $$$x_1, x_2 \in X$$$ and any $$$\lambda \in [0, 1]$$$, it holds that

Def. 4. A function $$$f: X \to \mathbb R$$$ is called strictly quasi-convex if for any $$$x_1, x_2 \in X$$$ and any $$$\lambda \in (0, 1)$$$, it holds that

It is possible to prove that a $$$1$$$-dimensional convex or strictly quasi-convex function is also unimodal. Moreover, all unimodal functions for which $$$L=R$$$ (infimal segment has a zero length) are also strictly quasi-convex, so these classes are almost equivalent on $$$\mathbb R$$$.

However, convexity and strict quasi-convexity do not imply one another. For example, $$$\max(1, |x|)$$$ is convex, but not strictly quasi-convex. On the other hand, $$$\sqrt{|x|}$$$ is strictly quasi-convex, but not convex. Ternary search would work on both of these functions.

Note that $$$f(x)$$$ must be strictly decreasing until it reaches minimum value and after the minimum value segment it must be strictly increasing for this to work. Otherwise it would be impossible to choose a correct segment reduction for $$$f(x_1)=f(x_2)$$$. This also means that non-strict quasi-convexity is not enough, as $$$\text{floor}(|x|)$$$ is quasi-convex and has segments of equal values other than $$$f(x^*)$$$.

Multi-dimensional search

Consider the problem of estimating the infimum of $$$f : X \times Y \to \mathbb R$$$. We can notice that

where

Def. 5. Function $$$h(x)$$$ defined above is called an infimal projection of $$$f(x, y)$$$ on $$$X$$$.

For $$$X \subset \mathbb R$$$ and $$$Y \subset \mathbb R^{c-1}$$$ it allows to reduce the minimization of $$$c$$$-dimensional $$$f(x, y)$$$ to the minimization of $$$1$$$-dimensional function $$$h(x)$$$, to compute which one needs to solve the minimization of $$$(c-1)$$$-dimensional function $$$f_x(y)$$$. The similar reduction can be applied to $$$f_x(y)$$$ to obtain a nested sequence of $$$1$$$-dimensional minimization problems.

For $$$c$$$-dimensional function $$$f(x_1, \dots, x_n)$$$ this reduction would look as follows:

where

Possible approach to this problem would be to apply a ternary search on each reduction level, so that the ternary search on level $$$k$$$

- minimizes $$$f(x_1, \dots, x_n)$$$ directly with fixed $$$x_1, \dots, x_{n-1}$$$ that are given from the levels above when $$$k=n$$$,

- passes $$$x_1, \dots, x_{k-1}$$$ and $$$x_k$$$ to the ternary search on the level below and uses its result to compute $$$h_{x_1,\dots, x_{k-1}}(x_k)$$$ when $$$k<n$$$.

Sometimes, it is possible to prove directly that all functions $$$h_{x_1 \dots x_k}(x_{k+1})$$$ are unimodal.

Another possible way when the domain of $$$f$$$ is a Cartesian product of convex sets is to prove that $$$f$$$ as a whole is convex or strictly quasi-convex, which would imply that all one-dimensional functions in the reduction preserve this property.

We will focus on the very first transition from $$$f(x, y)$$$ to $$$h(x)$$$ and $$$f_x(y)$$$ where $$$X\subset \mathbb R$$$ and $$$Y \subset \mathbb R^{c-1}$$$. We will assume that $$$f(x, y)$$$ is strictly quasi-convex and will prove that $$$h(x)$$$ and $$$f_x(y)$$$ are also strictly quasi-convex, the rest will follow from mathematical induction.

Proposition 1. Let $$$ f : X \times Y \to \mathbb R$$$ be a strictly quasi-convex function. Then $$$f_x(y) = f(x, y)$$$ and $$$h(x) = \inf_y f(x, y)$$$ are strictly quasi-convex as well.

Proof. $$$f_x$$$ is quasi-convex by definition, as it is equal to $$$f(x, y)$$$ on the interval between $$$(x,\inf Y)$$$ and $$$(x, \sup Y)$$$.

Let $$$y_1$$$ and $$$y_2$$$ to be optimal $$$y$$$ for $$$h(x_1)$$$ and $$$h(x_2)$$$. The interval between $$$(x_1, y_1)$$$ and $$$(x_2, y_2)$$$ contains a point $$$(x,y)$$$ such that

In a very similar way it is possible to prove that $$$h(x)$$$ and $$$f_x(y)$$$ are convex when $$$f(x, y)$$$ is.

Proposition 2. Let $$$ f : X \times Y \to \mathbb R$$$ be a convex function. Then $$$f_x(y) = f(x, y)$$$ and $$$h(x) = \inf_y f(x, y)$$$ are convex as well.

Proof. $$$f_x$$$ is convex by definition. Let $$$x_1, x_2 \in X$$$ and let $$$y_1$$$ and $$$y_2$$$ to be optimal $$$y$$$ for $$$h(x_1)$$$ and $$$h(x_2)$$$.

The interval between $$$(x_1, y_1)$$$ and $$$(x_2, y_2)$$$ contains a point $$$(x,y)=(x_1,y_1)+\lambda[(x_2,y_2)-(x_1,y_1)]$$$ such that

Perhaps, more intuitive way to see this is to notice that the epigraph of $$$h(x)$$$ is a projection on the $$$xOz$$$ plane of the epigraph of $$$f(x, y)$$$.

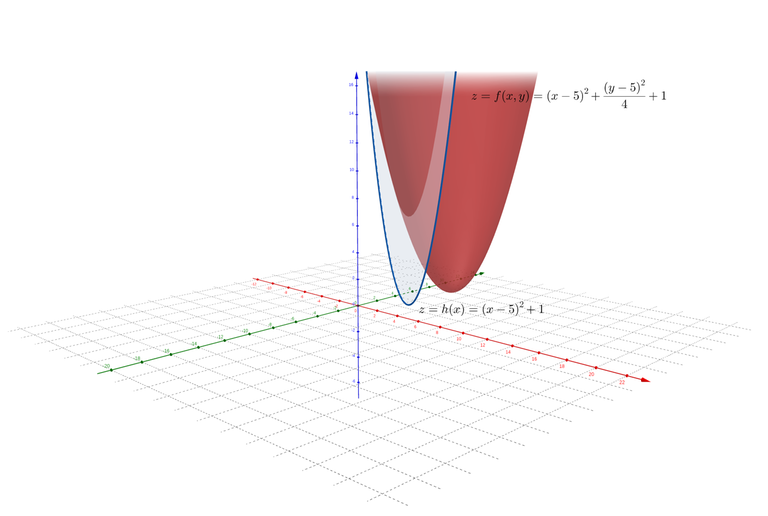

Below is the illustration. For $$$f(x, y) = (x-5)^2+ \frac{(y-5)^2}{4}+1$$$, its infimal projection is $$$h(x) = (x-5)^2+1$$$.

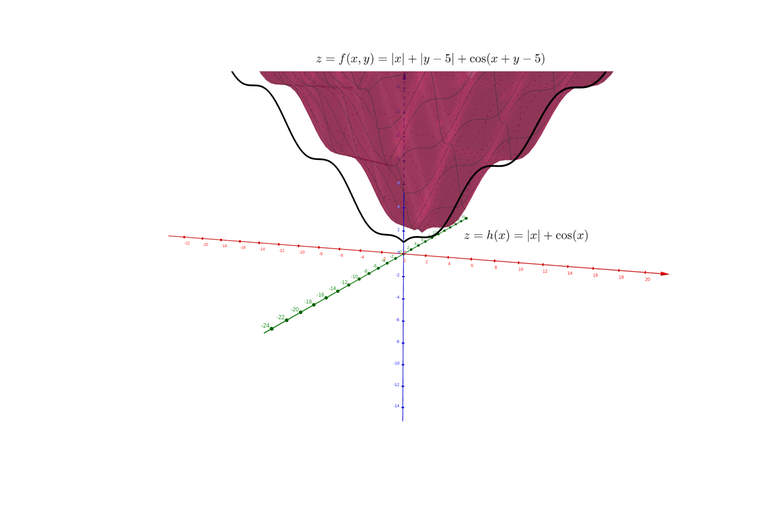

Another illustration with a non-convex function: $$$f(x, y)=|x|+|y-5|+\cos(x+y-5)$$$, $$$h(x)=|x|+\cos x$$$.

Caveat

Convexity and strict quasi-convexity of $$$f$$$ on $$$\mathbb R^2$$$ don't necessarily imply that ternary search would work on $$$\mathbb Z^2$$$. Let

The function $$$f$$$ is convex and strictly quasi-convex on $$$\mathbb R^2$$$. However, in small integers $$$h(2k) \approx 0$$$ and $$$h(2k+1) \approx \frac{1}{4}$$$, thus the function is neither convex nor uni-modal. It doesn't mean that ternary search is never applicable to integers, however you would need to be extra careful and prove directly that $$$h$$$ and $$$f_x$$$ are uni-modal to use it.

Open questions

- Are there any problems that are solvable with nested ternary search over integers or any other discrete set?

- Are there any better criterion for ternary search to be applicable in a discrete case, other than proving uni-modality of $$$h$$$ and $$$f_x$$$?

- What would be the most meaningful generalization of binary search on multi-dimensional case? Quasi-linear functions?

You should check out this blog post. I don't remember all the details, but I remember adding this comment at the bottom which described some nuances that came up in the two-dimensional binary search case.

Already on my list for the upcoming article, thanks!

I added some structure to the article, placing definitions and main proposition in separate blocks. Also added some more details, hope it's easier to read now.

thank you and happy new year. waiting for your alien trick blog.

Although strict quasi-convexity on $$$\mathbb{R}^2$$$ is not enough to make nested ternary search work on $$$\mathbb{Z}^2$$$, it is enough to make ternary search work on $$$\mathbb{Z} \times \mathbb{R}$$$, since that doesn't leave any gaps between vertically-adjacent lattice points for convex interpolations of points of interest to slip into. So, if $$$f : \mathbb{R}^2 \to \mathbb{R}$$$ is strictly quasi-convex and that for each integer $$$x$$$ there exists some integer $$$y$$$ which minimizes $$$f(x, y)$$$ among $$$y \in \mathbb{R}$$$, then ternary search will work for $$$f$$$ restricted to $$$\mathbb{Z}^2$$$ as well.

First of all, thanks for this blog ! I just have a small question about why we are using ternary search.

Can't we use binary search ? If $$$f$$$ is unimodal then we can binary search on the infimum and compare $$$f(\frac{l + r}{2})$$$ with $$$f(l)$$$ and $$$f(r)$$$ to know on which side of the slope $$$\frac{l + r}{2}$$$ lies. Please, can you tell me what I'm doing wrong ?

Also, I think that it's always possible to do only one ternary search even if we have multiple parameters. Say we have two parameters $$$x$$$ and $$$y$$$ and we know that $$$x \leq 100$$$. We could define $$$z = 100 \cdot x + y$$$. This way by knowing $$$z$$$ we can deduce $$$x$$$ and $$$y$$$. To keep the unimodality of the new function we might need to consider $$$-y$$$ in case the function is increasing and then decreasing for the parameter $$$y$$$.

Thanks by advance for your help